Now that it’s the summer and I have some time to myself, I’ve been thinking about how to boost the blog. Naturally I looked at my metrics. Turns out, no one really finds those “What I’ve been Reading / Playing / Watching” posts all that interesting. Shame. They’re quick and easy to do.

However, do you know what was the most popular post of the past year? My one rant I posted last February about AI. Surprise surprise, that’s an issue that’s only becoming more and more relevant, so I’m guessing that more and more people have been doing searches based on it. So why not milk it, and write a bit more?

(My most popular posts of all time were the ones on Bioshock and on Tolkien’s stories, so I’m going to have to think about how to do something similar to those eventually. Also on Arthur, since my Beaumains one likewise has some enduring appeal. I’ve got plans for the summer, as usual. We’ll see how long they last.)

An obvious flaw in my earlier post about AI was that it didn’t really have any solutions. No approaches. It was just, essentially, “this thing sucks and is going to make everything suck more.” Which, I mean, I stand by. AI is already making Google worse and causing problems in law, in media, and even in government. Thank you, Mr. Huckster-in-Health, for establishing the precedent of government agencies using phony AI-generated papers to advance agendas! That’ll go over well.

The thing is, that’s not really all that helpful. “Everything sucks!” might be valid, but it doesn’t help us move forward. While it might be true that the modern age has an unnatural and unhealthy obsession with “The Next Big Thing,” where people constantly hop on whatever’s new and shiny, every person can and should find a way to deal with, say, cell phones, even if they think cell phones are largely unhealthy and even negative.

As I said in my post on VR, whatever my concerns with the tech were, it was, inevitably, going to keep moving forward and see wide adoption. The same is true of AI. It’s going to keep being developed, it’s going to see wider and wider adoption. To some extent, our government actually NEEDS to invest in AI, if only to protect our cybersecurity systems from other countries’ AIs. And companies are going to keep using it, because even if it’s worse, it’s cheaper, and that’s their bread and butter. And people are going to keep using it, because it’s easier, and people are lazy.

So what can salvage this? What are my predictions / suggestions for dealing with AI in a future age?

(1) Never talk to an AI assistant. Insist on talking to a person.

This isn’t just me being a grump and insisting on a boycott. AI assistants are terrible and companies shouldn’t use them. How do I know? McDonald’s has tried using AI to take orders at select restaurants. It failed miserably. If AI isn’t capable of taking orders for burgers and fries, don’t let it tell you how to apply for health insurance.

Why do I say this? There’s been a recent story about a recovering addict who was told to “take a little meth to get through the week” by a therapy AI. UnitedHealth, one of the largest insurers in the United States, is currently under investigation for using a faulty AI that allegedly was designed to deny claims whenever possible. On a lighter note, Air Canada also found itself in a pickle when its AI assistant promised a customer a refund that the airline’s policy didn’t actually allow.

Then there was also a story, more than six months ago, about Sewell Setzer, a 14-year-old who fell deeply in love with a Character.AI avatar–a program that essentially creates virtual friends that will have conversations on any–quite literally any–topic the user wants. In the case of Sewell, the teen fell into depression and died by suicide.

An extreme example. But seriously–those “Friend AI” things that companies are talking about? Those are just creepy. I literally cannot think of a good application for those.

Hopefully, more lawsuits will eventually make AI assistants cost-prohibitive. They’re just too clearly problematic and buggy to be actually useful.

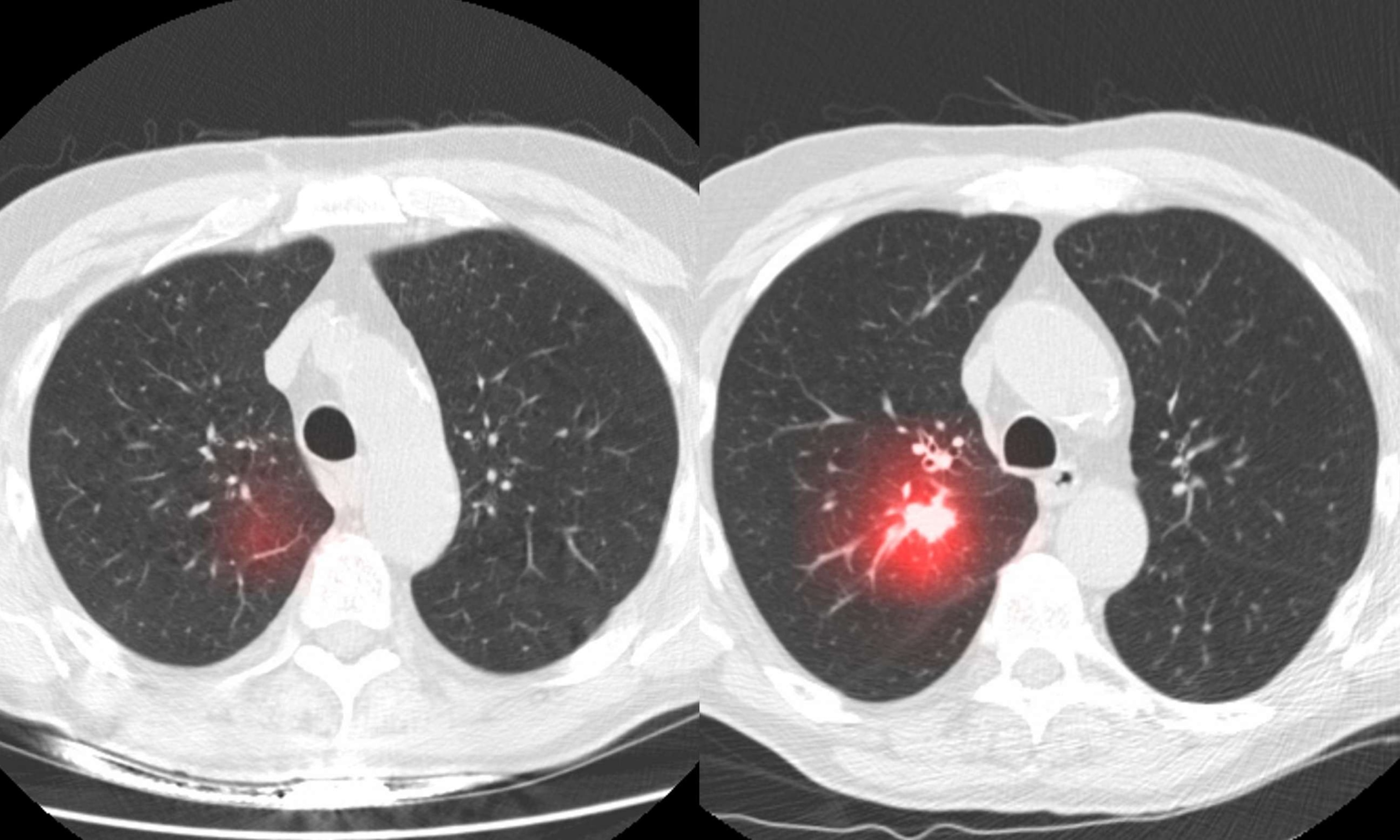

(2) Cancer screenings should start a lot sooner

One thing that even I recognize as a benefit is the way that AI has revolutionized medical science. Pattern recognition is a massive boon for examining millions of pieces of data and recognizing which seemingly insignificant blobs might truly be cancer and which might not. There’s massive potential for us to be able to recognize and treat cancer long before it’s a deadly danger, and we should take advantage of that.

What this means for doctors, I can’t say. I imagine people will always want a doctor to confirm what the AI has found, so doctors who analyze mammograms will have a job for quite some time, even if it’ll become mostly rubberstamping what the computer has said. Hopefully it will free up doctors to deal with work shortages and overworking problems at hospitals. That seems a bit too optimistic, though.

(3) Focus on more original animated productions. Avoid CGI fests. Do all live-action if you can.

AI is going to make CGI worse, and it’s going to make a lot of animated features start to look very generic. AI can’t completely replace filmmakers–every time you give a prompt it’ll give you a new main actor and a new background, so you can’t tell it “once more, with feeling,” lest you suddenly end up with a totally different character than in the last scene. The best it can do is animate the “in-betweens” of storyboard scenes, as was done on Across the Spider-Verse. (Interestingly, they themselves say AI shouldn’t be depended on.) What that gives you, though, is very complicated action sequences with very generic and stiff animations in between.

Big-name studios are going to jump on this in a major way. Expect to see a glut of animated films with very complicated/confusing action scenes but little in the way of natural or fluid movement. Expect, too, a lot of CGI films to start relying on AI-generated backgrounds, resulting in movies that feel the same in undefinable ways. Expect also a lot of “amateur” creatives to try to make it big in the movie world by generating AI films and flooding YouTube with them.

Don’t reward this behavior. Instead, seek out films that’ve put work into generating their own style, smoothly animating natural movement and long shots. Reward originality. Don’t fall for cheap YouTube amateur “animations” that are just autogenerated from a prompt. Follow dedicated artists and follow indie animators.

(4) Ban phones and electronics from schools. Completely.

Fortunately there’s already a strong groundswell moving this way for reasons independent of AI, but ChatGPT and Grok have made it more essential than ever. When your email account, or your messenger app, can literally answer every question and write a whole essay for you, phones are simply too big a liability. Schools can either ban phones, or they can become utterly useless.

But, I hear the inevitable tech apologist say, shouldn’t kids know how to function in the tech world? Didn’t you start this very blog because of a class about digital literacy?

Good memory, fictitious reader! Indeed, for a time back in 2012 this blog was devoted nearly entirely to my ENGL 5310 class, on Digital Literacy and the need to adapt our learning to digital spaces. My first few years in teaching, I tried very hard to teach students how to use computers and to ingrain in them digital learning skills. And I do think there would be use in a dedicated Computer Systems course that taught kids how to type on a keyboard and fill out spreadsheets–a course that, oddly enough, is absent from many of the schools insisting that English teachers need to “get with the times” and tell students how to use AI. The assumption is that kids are so tech savvy they’ll naturally know how to use laptops, but in reality, most are more familiar with phones than laptops.

My response to those arguing that we need to teach kids how to use AI so they’ll be prepared for the working world that will use it is quite simple: No one has ever pretended that AI is hard to use. Indeed, everyone praising it has always emphasized how you need scarcely any training at all. Simply put, there’s no reason to teach kids how to use such an easy tool. What IS needed, though, is the fundamentals in grammar, spelling, and research, so that you can tell if an AI-generated document is flawed and terrible (as it frequently is). Even for the writer who insists that AI should do all the heavy lifting (as stupid an idea as that is), you need writing classes in editing to teach students how to pare down meaningless fluff and put in more succinct, personalized text.

Just so you know, I wrote all this BEFORE the bombshell MIT study was released showing that AI usage rots your brain and makes you a less creative, less intelligent person. That was obvious.

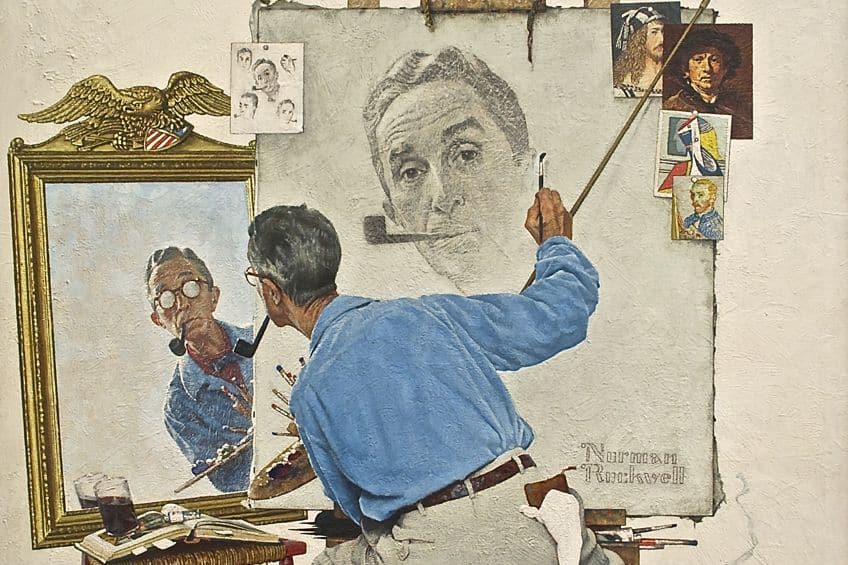

(5) Artists should–indeed must–avoid using AI

Anyone who wants to be taken seriously as an artist, anyone who wants to seriously develop their craft, anyone who cares about the expression of the human soul, anyone who cares about advancing the development of culture, must avoid using AI. Yes, AI will inevitably be adopted by the mass market culture. It will inevitably be terrible, but the market will not care, because it will be cheaper and help with profit margins.

Here’s the thing. Original artists are still going to stand out–probably more than ever–because of their individual vision. And original artists are going to be crucial, even to AI, because one thing that has been shown is that AI poisons itself. Training an artificial brain on mediocre artificial art just results in increasingly mediocre art. There’s even an argument that AI companies might want to hire professional artists to create original art specifically to improve the output of their AI.

So if you’re an artist, avoid using AI. Maybe use it for backgrounds and incidental details, but honestly, I think you’re better off avoiding even that. You got into this because you love making things. Make them.

(6) Guides and reviews will become more important than ever.

Despite all this, it’s possible–even likely–that books become swamped by AI bloat-books, and movies become overloaded with generic CGI fests, and even videogames become dominated by highly repetitive, bland, cheap nonsense. Thus the most crucial professional for the coming era is likely to be the reviewer, who can sift through all the crap and find the gems of individuality and hand-crafted goodness to highlight them to others. This was, in truth, already the case, but the coming age of AI nonsense will make it even more critical.

I feel like there ought to be some method of regulating and rating influencers, who currently are all over the place. It’s a situation liberating in its freedom, but not very illuminating for the average consumer. (It’s also prone to abuse.) There’s probably genuinely a space for an influencer who rates influencers, or at least some sort of website that categorizes leading influencers by interest and quality of rating. Maybe it already exists.

(Which is why, even if no one reads those “What I’ve been playing” posts, I’m still going to make one for what I played during Steam Next Fest. Later. Probably next week.)

Regardless. Much as everyone hates critics and “influencers”, it might be time to find a good one to attach yourself to. They’re going to be critical for surviving the upcoming bloat of bad art.

(7) AI-aided search may be helpful for processes. It is not useful for facts.

I want to believe–no, really, I do–that there are benefits to this new technology. I think that when we learn the proper places to use its pattern-recognition format, there will be immense boons. Like the early cancer detection mentioned above, for instance.

Similarly, I want to think that AI summaries of search results will be useful in some ways. I certainly think they can be useful for computer engineers searching for a bit of code or trying to develop a bit of code for a specific task. There is every possibility that AI might be good for this and other process-oriented searches. After all, processes rely on patterns. It might not be helpful for me to find 50 different recipes for Mac and Cheese, but an AI summary of what those 50 recipes have in common might be useful (though the result might not be as uniquely tasty as some of them).

That being said, the process should always be double-checked against an original example. As the “AI assistant” examples above show, sometimes AIs get it wrong. I cannot find the story now, but there was an account of AI telling a user that they genuinely could fly, if they truly and honestly believed they could as they jumped off the roof. Obviously, that process is faulty.

And here’s the other thing: while LLMs are great at patterns, they suck at specifics. Every time I see a “made by AI” presentation or video, there is usually some fact, statistic, or basic matter of reality that it has messed up. One particularly egregious example featured a lyric from the Grateful Dead about welcoming the future–a lyric that a quick Google search showed me did not actually exist. And let’s not forget about the guy who made a trailer full of nonexistent quotes.

AI sucks on specifics. But it’s good on pattern recognition. So use it on its strengths.

(8) Live-Form Storytelling will become the next blockbuster entertainment arena.

Perversely, as AI makes the production of our other forms of media cheaper and easier (and worse), people will likely gravitate toward the one area of storytelling where AI can’t dominate the medium. Live-form storytelling. Or in other words, table-top role-play gaming.

To be clear, AI can absolutely be used to supplement games like Dungeons and Dragons, Call of Cthulhu, and other gaming systems. AI can be used to generate characters, locations, even plotlines, in rapid order. People have even speculated about using an AI “Dungeon Master” to run games. However, most players agree that having a computer run the game in this way would ruin the whole point of the role-play. AI-enabled “dungeon masters” will probably be a thing, and will likely even increase the accessibility of the hobby to people who were unable to try it before. But having a flesh-and-blood DM will always be superior and preferable.

Those two together mean that a lot more people could find it easier to get into DnD, and simultaneously there might be a lot more people looking for a genuine DnD group to play with. (If so, this could also help with the loneliness epidemic.)

It may seem odd to claim that DnD, of all things, might become the popular art form of the next generation, but in some ways it’s already happening. There are multiple podcasts and video series dedicated to nothing more than friends navigating dungeons together. The role-playing group “Dimension 20” recently sold out Madison Square Garden to an audience of fans. Live-action shows of role-players are becoming increasingly common, and increasingly popular.

Even if AI takes over character and setting creation, the core of the experience is always going to be the role-playing of the people involved in the game, and the random chance of the dice rolls. This makes the medium inherently unpredictable, and inherently different every time. In a world on the verge of being filled with mediocre slop, it is the one outlet that has to be unique and different, because the core of the game is the people involved in it.

I think we’ll start seeing DnD become bigger and bigger. I think streamed arena events like “Gauntlet at the Garden” will become bigger and bigger (and I hope they refine the system of the audience rolling to participate, because that system seems like it’s full of unrealized potential). In a way, I think it’s remarkably similar to the most ancient and original form of storytelling, where stories were a live event, read and enacted by a professional bard, or just someone at the table who was good at it.

Or maybe not.

The problem with any post like this is that the future is uniquely bizarre. There’s the famous saying: “I do not know if the future will be worse than we imagine, or better, but I do know it will be stranger.” For as many times as mankind has imagined the advent of artificial intelligence, no one ever foresaw it making its big debut as a consumer product to cheat on essays and make fake photos.

Who really knows what’s coming?